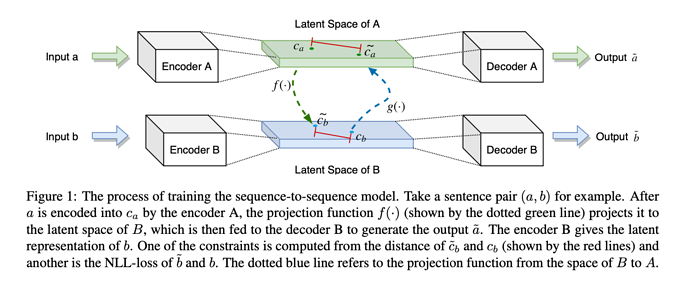

Supervised and unsupervised style transfer are often regarded as two independent paradigms; this paper proposes a semi-supervised method to bridge the gap between them. The authors train two sequence-to-sequence (S2S) models, one per style, and then learn two projection functions between the latent spaces of the two encoders. The S2S models are denoising auto-encoders, so training them requires no parallel corpus. Once an encoder has been trained for each style, its hidden states are projected into the latent space of its counterpart under two criteria:

- For a parallel sentence pair (a, b), the Euclidean distance between encoder B's original representation of b and the hidden states of a projected into B's latent space should be small.

- The probability of reproducing b given the projected hidden states of a should be large.
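The two supervised criteria can be sketched as a single loss for one parallel pair. This is a minimal NumPy sketch under assumptions that do not come from the paper: each sentence is summarized by one fixed-size vector, the projection is a plain linear map `W_ab`, and decoder B is replaced by a hypothetical bag-of-words softmax (`bow_nll`) so the example stays self-contained. The weight `lam` is also a hypothetical knob; the paper's actual encoders, decoders and loss weighting may differ.

```python
import numpy as np

def project(h, W):
    """Assumed linear projection of a sentence vector into the other encoder's space."""
    return h @ W

def distance_loss(h_a, h_b, W_ab):
    """Criterion 1: squared Euclidean distance between projected a and B's own encoding of b."""
    diff = project(h_a, W_ab) - h_b
    return float(np.dot(diff, diff))

def log_softmax(z):
    """Numerically stable log-softmax over a 1-D vector of logits."""
    z = z - np.max(z)
    return z - np.log(np.sum(np.exp(z)))

def bow_nll(h, W_out, target_ids):
    """Criterion 2 (toy): negative log-likelihood of the target tokens under a
    hypothetical bag-of-words decoder that scores every token from the same vector h."""
    log_probs = log_softmax(h @ W_out)
    return float(-np.sum(log_probs[target_ids]))

def supervised_loss(h_a, h_b, b_ids, W_ab, W_out_b, lam=1.0):
    """Sum of both criteria for one parallel pair (a, b); lam is a hypothetical weight."""
    h_proj = project(h_a, W_ab)
    return distance_loss(h_a, h_b, W_ab) + lam * bow_nll(h_proj, W_out_b, b_ids)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    H, V = 8, 20
    h_a = rng.normal(size=H)                      # stand-in for encoder A's vector for a
    h_b = rng.normal(size=H)                      # stand-in for encoder B's vector for b
    W_ab = rng.normal(scale=0.1, size=(H, H))     # projection A -> B (to be learned)
    W_out_b = rng.normal(scale=0.1, size=(H, V))  # toy decoder B
    b_ids = np.array([3, 7, 7, 12])               # hypothetical token ids of b
    print(supervised_loss(h_a, h_b, b_ids, W_ab, W_out_b))
```

Minimizing the first term pulls projected representations onto B's own encodings; the second term checks that the projected vectors are also usable by B's decoder rather than merely close in distance.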

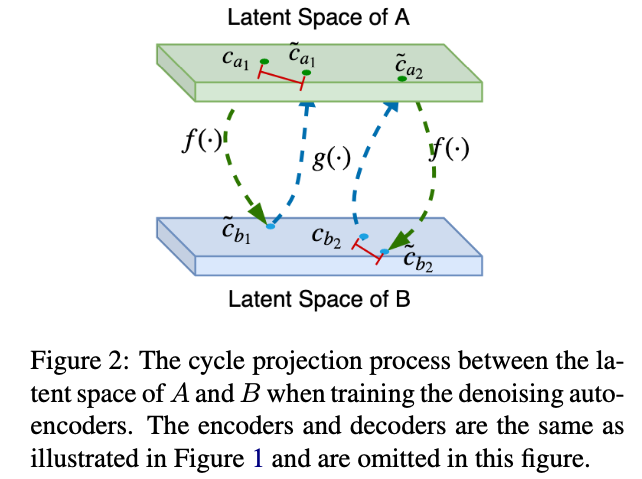

To exploit non-parallel corpora, they propose a cycle projection method inspired by back-translation in machine translation, with two criteria analogous to those above:

- For a sentence x from either domain, the Euclidean distance between its original representation and its hidden states after being projected into the other domain and then back should be small.

- Given the hidden states projected back, the probability of reconstructing x should be large.
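The cycle criteria can be sketched the same way for an unlabeled sentence x from domain A (the B-side case is symmetric, with the roles of the two projections swapped). Again this is a minimal sketch rather than the paper's implementation: sentences are assumed to be single vectors, both projections `W_ab` and `W_ba` are assumed linear, and `W_out_a` stands in for decoder A as a hypothetical bag-of-words softmax.

```python
import numpy as np

def project(h, W):
    """Assumed linear projection between the two latent spaces."""
    return h @ W

def log_softmax(z):
    """Numerically stable log-softmax over a 1-D vector of logits."""
    z = z - np.max(z)
    return z - np.log(np.sum(np.exp(z)))

def cycle_distance(h_x, W_ab, W_ba):
    """Cycle criterion 1: squared Euclidean distance between x's encoding
    and its A -> B -> A round trip."""
    diff = project(project(h_x, W_ab), W_ba) - h_x
    return float(np.dot(diff, diff))

def cycle_loss(h_x, x_ids, W_ab, W_ba, W_out_a, lam=1.0):
    """Cycle criteria 1 + 2 for one unlabeled sentence x from domain A.
    Criterion 2 uses a hypothetical bag-of-words decoder A (W_out_a) that
    scores every token of x from the round-tripped vector; lam is a hypothetical weight."""
    h_back = project(project(h_x, W_ab), W_ba)
    nll = -np.sum(log_softmax(h_back @ W_out_a)[x_ids])
    return cycle_distance(h_x, W_ab, W_ba) + lam * float(nll)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    H, V = 8, 20
    h_x = rng.normal(size=H)                               # stand-in for encoder A's vector for x
    W_ab = np.eye(H) + rng.normal(scale=0.1, size=(H, H))  # projection A -> B
    W_out_a = rng.normal(scale=0.1, size=(H, V))           # toy decoder A
    x_ids = np.array([0, 5, 9])                            # hypothetical token ids of x
    # An exact inverse makes the round trip lossless, so this prints a value close to 0.0.
    print(cycle_distance(h_x, W_ab, np.linalg.inv(W_ab)))
    # A mismatched back-projection (identity) incurs a positive cycle loss.
    print(cycle_loss(h_x, x_ids, W_ab, np.eye(H), W_out_a))
```

The distance term vanishes only when `W_ba` undoes `W_ab` on the encoded sentences, so the cycle loss pushes the two projections toward mutual consistency without any parallel data, much as back-translation does.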

These ideas are remarkably simple, yet they turn out to be quite effective. The only weakness of this paper is that its writing is somewhat confusing: the authors could have offered more intuition before presenting a long series of equations.

Overall Recommendation

- 5: Transformative: This paper is likely to change our field. It should be considered for a best paper award.

- 4.5: Exciting: It changed my thinking on this topic. I would fight for it to be accepted.

- 4: Strong: I learned a lot from it. I would like to see it accepted.

- 3.5: Leaning positive: It can be accepted more or less in its current form. However, the work it describes is not particularly exciting and/or inspiring, so it will not be a big loss if people don’t see it in this conference.

- 3: Ambivalent: It has merits (e.g., it reports state-of-the-art results, the idea is nice), but there are key weaknesses (e.g., I didn’t learn much from it, evaluation is not convincing, it describes incremental work). I believe it can significantly benefit from another round of revision, but I won’t object to accepting it if my co-reviewers are willing to champion it.

- 2.5: Leaning negative: I am leaning towards rejection, but I can be persuaded if my co-reviewers think otherwise.

- 2: Mediocre: I would rather not see it in the conference.

- 1.5: Weak: I am pretty confident that it should be rejected.

- 1: Poor: I would fight to have it rejected.