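I briefly compared several open-source NLP toolkits and decided to use HanLP as my main tool at work from now on. However, the code in the author's book "Introduction to NLP" (《NLP入门》) no longer works with version 2.1. Please publish a new book soon.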

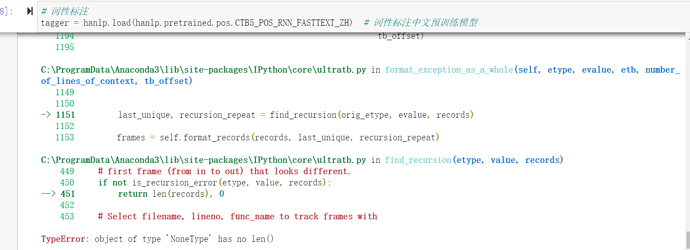

I also have a problem to report: loading certain pretrained models fails.

Local environment: Windows 10 (version 1809), ThinkPad X13 (i5), Python 3.8.5, with hanlp[full] installed.

Failed to load https://file.hankcs.com/hanlp/pos/ctb5_pos_rnn_fasttext_20191230_202639.zip. See traceback below:

================================ERROR LOG BEGINS================================

Traceback (most recent call last):

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\utils\component_util.py", line 81, in load_from_meta_file

obj.load(save_dir, verbose=verbose, **kwargs)

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\common\keras_component.py", line 214, in load

self.build(**merge_dict(self.config, training=False, logger=logger, **kwargs, overwrite=True, inplace=True))

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\common\keras_component.py", line 224, in build

self.model = self.build_model(**merge_dict(self.config, training=kwargs.get('training', None),

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\components\taggers\rnn_tagger_tf.py", line 35, in build_model

embeddings = build_embedding(embeddings, self.transform.word_vocab, self.transform)

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\layers\embeddings\util_tf.py", line 44, in build_embedding

layer: tf.keras.layers.Embedding = tf.keras.utils.deserialize_keras_object(embeddings)

File "C:\Users\李泽华\AppData\Roaming\Python\Python38\site-packages\tensorflow\python\keras\utils\generic_utils.py", line 360, in deserialize_keras_object

return cls.from_config(cls_config)

File "C:\Users\李泽华\AppData\Roaming\Python\Python38\site-packages\tensorflow\python\keras\engine\base_layer.py", line 697, in from_config

return cls(**config)

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\layers\embeddings\fast_text_tf.py", line 29, in __init__

self.model = fasttext.load_model(filepath)

File "C:\ProgramData\Anaconda3\lib\site-packages\fasttext\FastText.py", line 350, in load_model

return _FastText(model_path=path)

File "C:\ProgramData\Anaconda3\lib\site-packages\fasttext\FastText.py", line 43, in __init__

self.f.loadModel(model_path)

ValueError: C:\Users\李泽华\AppData\Roaming\hanlp\thirdparty\dl.fbaipublicfiles.com\fasttext\vectors-wiki\wiki.zh\wiki.zh.bin cannot be opened for loading!

=================================ERROR LOG ENDS=================================

If the problem still persists, please submit an issue to https://github.com/hankcs/HanLP/issues

When reporting an issue, make sure to paste the FULL ERROR LOG above.

ERROR:root:Internal Python error in the inspect module.

Below is the traceback from this internal error.

Traceback (most recent call last):

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\utils\component_util.py", line 81, in load_from_meta_file

obj.load(save_dir, verbose=verbose, **kwargs)

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\common\keras_component.py", line 214, in load

self.build(**merge_dict(self.config, training=False, logger=logger, **kwargs, overwrite=True, inplace=True))

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\common\keras_component.py", line 224, in build

self.model = self.build_model(**merge_dict(self.config, training=kwargs.get('training', None),

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\components\taggers\rnn_tagger_tf.py", line 35, in build_model

embeddings = build_embedding(embeddings, self.transform.word_vocab, self.transform)

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\layers\embeddings\util_tf.py", line 44, in build_embedding

layer: tf.keras.layers.Embedding = tf.keras.utils.deserialize_keras_object(embeddings)

File "C:\Users\李泽华\AppData\Roaming\Python\Python38\site-packages\tensorflow\python\keras\utils\generic_utils.py", line 360, in deserialize_keras_object

return cls.from_config(cls_config)

File "C:\Users\李泽华\AppData\Roaming\Python\Python38\site-packages\tensorflow\python\keras\engine\base_layer.py", line 697, in from_config

return cls(**config)

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\layers\embeddings\fast_text_tf.py", line 29, in __init__

self.model = fasttext.load_model(filepath)

File "C:\ProgramData\Anaconda3\lib\site-packages\fasttext\FastText.py", line 350, in load_model

return _FastText(model_path=path)

File "C:\ProgramData\Anaconda3\lib\site-packages\fasttext\FastText.py", line 43, in __init__

self.f.loadModel(model_path)

ValueError: C:\Users\李泽华\AppData\Roaming\hanlp\thirdparty\dl.fbaipublicfiles.com\fasttext\vectors-wiki\wiki.zh\wiki.zh.bin cannot be opened for loading!

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\ProgramData\Anaconda3\lib\site-packages\IPython\core\interactiveshell.py", line 3418, in run_code

exec(code_obj, self.user_global_ns, self.user_ns)

File "", line 2, in

tagger = hanlp.load(hanlp.pretrained.pos.CTB5_POS_RNN_FASTTEXT_ZH) # Chinese pretrained POS tagging model

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\__init__.py", line 43, in load

return load_from_meta_file(save_dir, 'meta.json', verbose=verbose, **kwargs)

File "C:\ProgramData\Anaconda3\lib\site-packages\hanlp\utils\component_util.py", line 114, in load_from_meta_file

exit(1)

SystemExit: 1

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\ProgramData\Anaconda3\lib\site-packages\IPython\core\ultratb.py", line 1170, in get_records

return _fixed_getinnerframes(etb, number_of_lines_of_context, tb_offset)

File "C:\ProgramData\Anaconda3\lib\site-packages\IPython\core\ultratb.py", line 316, in wrapped

return f(*args, **kwargs)

File "C:\ProgramData\Anaconda3\lib\site-packages\IPython\core\ultratb.py", line 350, in _fixed_getinnerframes

records = fix_frame_records_filenames(inspect.getinnerframes(etb, context))

File "C:\ProgramData\Anaconda3\lib\inspect.py", line 1503, in getinnerframes

frameinfo = (tb.tb_frame,) + getframeinfo(tb, context)

AttributeError: 'tuple' object has no attribute 'tb_frame'

ValueError Traceback (most recent call last)

C:\ProgramData\Anaconda3\lib\site-packages\hanlp\utils\component_util.py in load_from_meta_file(save_dir, meta_filename, transform_only, verbose, **kwargs)

80 if os.path.isfile(os.path.join(save_dir, 'config.json')):

---> 81 obj.load(save_dir, verbose=verbose, **kwargs)

82 else:

C:\ProgramData\Anaconda3\lib\site-packages\hanlp\common\keras_component.py in load(self, save_dir, logger, **kwargs)

213 self.load_vocabs(save_dir)

--> 214 self.build(**merge_dict(self.config, training=False, logger=logger, **kwargs, overwrite=True, inplace=True))

215 self.load_weights(save_dir, **kwargs)

C:\ProgramData\Anaconda3\lib\site-packages\hanlp\common\keras_component.py in build(self, logger, **kwargs)

223 self.transform.build_config()

--> 224 self.model = self.build_model(**merge_dict(self.config, training=kwargs.get('training', None),

225 loss=kwargs.get('loss', None)))

C:\ProgramData\Anaconda3\lib\site-packages\hanlp\components\taggers\rnn_tagger_tf.py in build_model(self, embeddings, embedding_trainable, rnn_input_dropout, rnn_output_dropout, rnn_units, loss, **kwargs)

34 model = tf.keras.Sequential()

---> 35 embeddings = build_embedding(embeddings, self.transform.word_vocab, self.transform)

36 model.add(embeddings)

C:\ProgramData\Anaconda3\lib\site-packages\hanlp\layers\embeddings\util_tf.py in build_embedding(embeddings, word_vocab, transform)

43 transform.map_x = False

---> 44 layer: tf.keras.layers.Embedding = tf.keras.utils.deserialize_keras_object(embeddings)

45 # Embedding specific configuration

~\AppData\Roaming\Python\Python38\site-packages\tensorflow\python\keras\utils\generic_utils.py in deserialize_keras_object(identifier, module_objects, custom_objects, printable_module_name)

359 with CustomObjectScope(custom_objects):

--> 360 return cls.from_config(cls_config)

361 else:

~\AppData\Roaming\Python\Python38\site-packages\tensorflow\python\keras\engine\base_layer.py in from_config(cls, config)

696 """

--> 697 return cls(**config)

698

C:\ProgramData\Anaconda3\lib\site-packages\hanlp\layers\embeddings\fast_text_tf.py in __init__(self, filepath, padding, name, **kwargs)

28 with stdout_redirected(to=os.devnull, stdout=sys.stderr):

---> 29 self.model = fasttext.load_model(filepath)

30 kwargs.pop('input_dim', None)

C:\ProgramData\Anaconda3\lib\site-packages\fasttext\FastText.py in load_model(path)

349 eprint("Warning : load_model does not return WordVectorModel or SupervisedModel any more, but a FastText object which is very similar.")

--> 350 return _FastText(model_path=path)

351

C:\ProgramData\Anaconda3\lib\site-packages\fasttext\FastText.py in __init__(self, model_path, args)

42 if model_path is not None:

---> 43 self.f.loadModel(model_path)

44 self._words = None

ValueError: C:\Users\李泽华\AppData\Roaming\hanlp\thirdparty\dl.fbaipublicfiles.com\fasttext\vectors-wiki\wiki.zh\wiki.zh.bin cannot be opened for loading!

During handling of the above exception, another exception occurred:

SystemExit Traceback (most recent call last)

[... skipping hidden 1 frame]

in

1 # POS tagging

----> 2 tagger = hanlp.load(hanlp.pretrained.pos.CTB5_POS_RNN_FASTTEXT_ZH) # Chinese pretrained POS tagging model

C:\ProgramData\Anaconda3\lib\site-packages\hanlp\__init__.py in load(save_dir, verbose, **kwargs)

42 verbose = HANLP_VERBOSE

---> 43 return load_from_meta_file(save_dir, 'meta.json', verbose=verbose, **kwargs)

44

C:\ProgramData\Anaconda3\lib\site-packages\hanlp\utils\component_util.py in load_from_meta_file(save_dir, meta_filename, transform_only, verbose, **kwargs)

113 'When reporting an issue, make sure to paste the FULL ERROR LOG above.')

--> 114 exit(1)

115

SystemExit: 1

During handling of the above exception, another exception occurred:

TypeError Traceback (most recent call last)

[... skipping hidden 1 frame]

C:\ProgramData\Anaconda3\lib\site-packages\IPython\core\interactiveshell.py in showtraceback(self, exc_tuple, filename, tb_offset, exception_only, running_compiled_code)

2036 stb = ['An exception has occurred, use %tb to see '

2037 'the full traceback.\n']

-> 2038 stb.extend(self.InteractiveTB.get_exception_only(etype,

2039 value))

2040 else:

C:\ProgramData\Anaconda3\lib\site-packages\IPython\core\ultratb.py in get_exception_only(self, etype, value)

821 value : exception value

822 """

--> 823 return ListTB.structured_traceback(self, etype, value)

824

825 def show_exception_only(self, etype, evalue):

C:\ProgramData\Anaconda3\lib\site-packages\IPython\core\ultratb.py in structured_traceback(self, etype, evalue, etb, tb_offset, context)

696 chained_exceptions_tb_offset = 0

697 out_list = (

--> 698 self.structured_traceback(

699 etype, evalue, (etb, chained_exc_ids),

700 chained_exceptions_tb_offset, context)

C:\ProgramData\Anaconda3\lib\site-packages\IPython\core\ultratb.py in structured_traceback(self, etype, value, tb, tb_offset, number_of_lines_of_context)

1434 else:

1435 self.tb = tb

-> 1436 return FormattedTB.structured_traceback(

1437 self, etype, value, tb, tb_offset, number_of_lines_of_context)

1438

C:\ProgramData\Anaconda3\lib\site-packages\IPython\core\ultratb.py in structured_traceback(self, etype, value, tb, tb_offset, number_of_lines_of_context)

1334 if mode in self.verbose_modes:

1335 # Verbose modes need a full traceback

-> 1336 return VerboseTB.structured_traceback(

1337 self, etype, value, tb, tb_offset, number_of_lines_of_context

1338 )

C:\ProgramData\Anaconda3\lib\site-packages\IPython\core\ultratb.py in structured_traceback(self, etype, evalue, etb, tb_offset, number_of_lines_of_context)

1191 """Return a nice text document describing the traceback."""

1192

-> 1193 formatted_exception = self.format_exception_as_a_whole(etype, evalue, etb, number_of_lines_of_context,

1194 tb_offset)

1195

C:\ProgramData\Anaconda3\lib\site-packages\IPython\core\ultratb.py in format_exception_as_a_whole(self, etype, evalue, etb, number_of_lines_of_context, tb_offset)

1149

1150

-> 1151 last_unique, recursion_repeat = find_recursion(orig_etype, evalue, records)

1152

1153 frames = self.format_records(records, last_unique, recursion_repeat)

C:\ProgramData\Anaconda3\lib\site-packages\IPython\core\ultratb.py in find_recursion(etype, value, records)

449 # first frame (from in to out) that looks different.

450 if not is_recursion_error(etype, value, records):

--> 451 return len(records), 0

452

453 # Select filename, lineno, func_name to track frames with

TypeError: object of type 'NoneType' has no len()
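For anyone who runs into the same `cannot be opened for loading!` message, a quick first step is to look at the file the loader complained about. The sketch below uses only the standard library; `diagnose_model_file` is a hypothetical helper name, not part of HanLP or fastText. It reports whether the file exists, how large it is, and whether its path is pure ASCII. The last check is included because the failing path here sits under a user directory with Chinese characters, which is one possible cause on Windows, although nothing in this thread confirms it.

```python
from pathlib import Path


def diagnose_model_file(path_str):
    """Collect basic facts about a model file that a loader failed to open.

    Hypothetical helper for illustration; it does not call HanLP or fastText.
    """
    path = Path(path_str)
    exists = path.is_file()
    return {
        # False suggests the download never finished or was cleaned up.
        "exists": exists,
        # A size far below the expected model size suggests a truncated download.
        "size_bytes": path.stat().st_size if exists else None,
        # False means the path contains non-ASCII characters.
        "path_is_ascii": str(path).isascii(),
    }


# Path copied from the ValueError in the log; adjust it for your own machine.
FAILING_PATH = (
    r"C:\Users\李泽华\AppData\Roaming\hanlp\thirdparty"
    r"\dl.fbaipublicfiles.com\fasttext\vectors-wiki\wiki.zh\wiki.zh.bin"
)

for key, value in diagnose_model_file(FAILING_PATH).items():
    print(f"{key}: {value}")
```

If the file is missing or suspiciously small, deleting the cached copy and letting HanLP download it again is a reasonable thing to try. If the file looks fine but the path is not ASCII, moving the data directory to an ASCII-only location may be worth testing; as far as I know HanLP reads a `HANLP_HOME` environment variable for this, but please check the documentation for your version.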

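Thank you for choosing HanLP. We have also run in-depth internal evaluations against mainstream open-source projects, and HanLP's accuracy and speed are indeed ahead.

Thanks for the support. If I get the chance to write another book, I hope to make it better. As for the timing, it is not right yet. See

I tested this and found no problem; see this notebook: https://play.hanlp.ml/run/hanlp-fasttext-pos

You may have been using an old version or an old model.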
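To check the version side of this, it helps to list exactly which package versions are installed before comparing against the notebook. This is a minimal sketch using the standard library's `importlib.metadata` (available from Python 3.8); `installed_versions` is a hypothetical helper name, and the three package names are simply the ones that appear in the traceback.

```python
from importlib import metadata  # standard library since Python 3.8


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Packages that show up in the traceback above.
for name, version in installed_versions(["hanlp", "fasttext", "tensorflow"]).items():
    print(f"{name}: {version or 'not installed'}")
```

Pasting this output together with the full error log makes it much easier for others to tell whether an outdated install is involved.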

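Thank you very much for taking the time to reply. For a newcomer, usage examples for version 2.1 are indeed scarce, for instance advanced usage such as annotating my own text and feeding it to a model for training. I am a complete beginner; would it be best to finish "Introduction to NLP" (《NLP入门》), which covers 1.0, and then study the 2.1 demos in the git repository?

Yes, exactly. The basic concepts carry over, and the demos are written so that they can be run directly.

Going deeper, the overall design and the model principles of 2.x are much more advanced. For a project meant to work out of the box, getting the application interface right already takes a lot of effort. Explaining the design philosophy on top of that would certainly be no simple matter, and it requires the audience to have some background. As for the principles behind each model, you have to go through the papers to really understand them.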