Since the debut of BERT, many efforts have been made to revise pre-training methods. Among them, this paper may be the most extensive and comprehensive. The authors, from Google, spent a lot of money experimenting with many pre-training options and contributed their empirical findings in this paper, which is well worth reading if you want to pre-train a transformer from scratch.

Exploration

Architectures

- Encoder-decoder with a denoising objective performs best

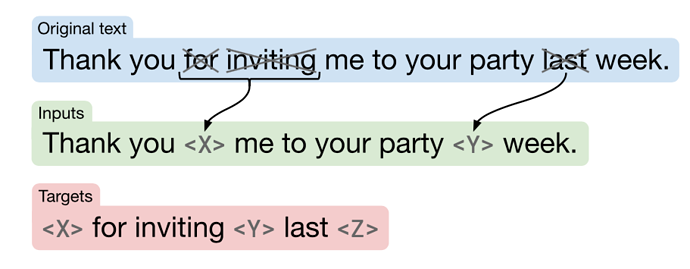

Unsupervised Objectives

- The BERT-style denoising objective wins over prefix language modeling and deshuffling

- Corruption rate: 15%

- Corrupt spans with an average length of 3

Pre-training Data set

- Experiments pre-train on 2^{35} (about 34B) tokens, so the number of passes over each corpus depends on its size, and some corpora are not repeated many times.

- Domain

- 17GB of highly rated web text (WebText-like) performs comparably to, or even better than, the 745GB C4.

- 20GB of Wikipedia + Toronto Books Corpus ranks top on SQuAD and SuperGLUE (SGLUE).

- Data set size

- With the number of training steps held fixed, the full data set, which is never repeated, ultimately wins over smaller data sets that must be repeated
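
To make the repetition trade-off concrete, here is a minimal sketch that computes how many passes a fixed budget of 2^{35} tokens makes over a data set of a given size. The truncated data set sizes in the loop are hypothetical values chosen for illustration, and `passes_over` is a helper name introduced here, not something from the paper's code.

```python
# Fixed pre-training budget used in the exploration experiments.
TOKEN_BUDGET = 2 ** 35  # 34,359,738,368 tokens, about 34B


def passes_over(dataset_tokens: int, budget: int = TOKEN_BUDGET) -> float:
    """Return how many times the token budget cycles through a data set."""
    if dataset_tokens <= 0:
        raise ValueError("dataset_tokens must be positive")
    return budget / dataset_tokens


# Hypothetical truncated data set sizes (in tokens), for illustration only.
for exponent in (29, 27, 25, 23):
    size = 2 ** exponent
    print(f"2^{exponent} tokens -> {passes_over(size):,.0f} passes")
```

Under a fixed budget, every 4x reduction in data set size means 4x more repetitions, which is the regime where the smaller data sets start to lose.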

Training Strategy

- Adapter layers perform the worst

- Gradual unfreezing does not quite match the results of fine-tuning all parameters

- Multi-task learning

- Multi-task training alone underperforms pre-training followed by fine-tuning on most tasks

- Multi-task pre-training + fine-tuning improves some machine translation (MT) tasks

Scaling

- Increasing model size and training steps together works best

- Increasing only the batch size is not the most effective way to scale

Summary

T5 applies the following settings:

Objective

- Use a mean span length of 3 and corrupt 15% of the original sequence
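
The span-corruption objective can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: the sentinel names (`<extra_id_0>`, ...) follow a common T5 tokenizer convention, `corrupt_spans` and `sample_spans` are hypothetical helper names, and the sampler splits the corrupted tokens into equal-length spans rather than drawing random span lengths.

```python
import random


def corrupt_spans(tokens, spans):
    """Replace each (start, end) span with a sentinel; return (inputs, targets)."""
    inputs, targets = [], []
    cursor = 0
    for i, (start, end) in enumerate(sorted(spans)):
        sentinel = f"<extra_id_{i}>"
        inputs.extend(tokens[cursor:start])  # keep text before the span
        inputs.append(sentinel)              # one sentinel stands in for the span
        targets.append(sentinel)
        targets.extend(tokens[start:end])    # the model must predict the span
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")  # closing sentinel
    return inputs, targets


def sample_spans(n_tokens, rate=0.15, mean_span=3, seed=0):
    """Pick non-adjacent spans covering about `rate` of the sequence."""
    rng = random.Random(seed)
    n_noise = max(1, round(n_tokens * rate))
    n_spans = max(1, round(n_noise / mean_span))
    base, extra = divmod(n_noise, n_spans)
    lengths = [base + (i < extra) for i in range(n_spans)]
    # gaps[0] precedes the first span and gaps[-1] follows the last one;
    # interior gaps start at 1 so that two spans never touch.
    gaps = [0] + [1] * (n_spans - 1) + [0]
    free = n_tokens - n_noise - (n_spans - 1)
    if free < 0:
        raise ValueError("sequence too short for the requested corruption")
    for _ in range(free):
        gaps[rng.randrange(len(gaps))] += 1
    spans, pos = [], 0
    for gap, length in zip(gaps, lengths):
        start = pos + gap
        spans.append((start, start + length))
        pos = start + length
    return spans


tokens = "Thank you for inviting me to your party last week .".split()
inputs, targets = corrupt_spans(tokens, [(2, 4), (8, 9)])
print(" ".join(inputs))   # Thank you <extra_id_0> me to your party <extra_id_1> week .
print(" ".join(targets))  # <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```

For a 100-token sequence, `sample_spans(100)` yields 5 spans of 3 tokens each, matching the 15% corruption rate and mean span length of 3.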

Longer training

- We therefore pre-train our models for 1 million steps on a batch size of 2^{11} sequences of length 512, corresponding to a total of about 1 trillion pre-training tokens

- Since repetition could be harmful, we opted instead to continue using the C4 data set.
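
As a quick sanity check on the "about 1 trillion tokens" figure, the arithmetic can be reproduced in a few lines. This sketch reads the batch size as 2^{11} = 2,048 sequences and assumes every sequence is filled to the full 512 tokens; `total_tokens` is a helper name introduced here.

```python
def total_tokens(steps: int, batch_size: int, seq_len: int) -> int:
    """Upper bound on tokens seen, assuming fully packed sequences."""
    return steps * batch_size * seq_len


steps = 1_000_000
batch_size = 2 ** 11  # 2,048 sequences per step
seq_len = 512         # tokens per sequence

final_budget = total_tokens(steps, batch_size, seq_len)
exploration_budget = 2 ** 35  # budget used in the exploration experiments

print(f"{final_budget:,}")                # 1,048,576,000,000
print(final_budget / exploration_budget)  # 30.517578125
```

So the final models see roughly 30 times more tokens than the runs in the exploration section.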

Model sizes

- 11B parameters for the largest model (feed-forward dimension d_ff = 65,536 with 128-headed attention)

Multi-task pre-training

- Pre-training on a multi-task mixture of unsupervised and supervised tasks before fine-tuning worked as well as pre-training on the unsupervised task alone

- It also has the practical benefit of being able to monitor “downstream” performance for the entire duration of training, rather than just during fine-tuning.

Beam search

- For tasks with long output sequences, we found improved performance from using beam search
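
For readers unfamiliar with beam search, here is a minimal sketch on a toy next-token table. Everything in it is an assumption made for illustration: `TOY_MODEL`, `toy_step`, and the beam width are hypothetical, there is no length penalty, and the code is not the decoder used in the paper. It only shows why keeping several hypotheses can beat greedy decoding.

```python
import math

# Hypothetical next-token probabilities, keyed by the last token of the prefix.
TOY_MODEL = {
    "<s>": {"a": 0.6, "b": 0.4},
    "a": {"x": 0.5, "y": 0.5},
    "b": {"z": 0.9, "w": 0.1},
}


def toy_step(prefix):
    """Return log-probabilities of the next token; unknown prefixes end the sequence."""
    probs = TOY_MODEL.get(prefix[-1], {"</s>": 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}


def beam_search(step_fn, start="<s>", end="</s>", beam_width=2, max_len=10):
    """Return (sequence, log-probability) of the best hypothesis found."""
    beams = [([start], 0.0)]
    finished = []
    for _ in range(max_len):
        # Expand every live hypothesis by every possible next token.
        candidates = [
            (seq + [tok], score + logp)
            for seq, score in beams
            for tok, logp in step_fn(seq).items()
        ]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        # Keep only the top `beam_width` candidates; set aside completed ones.
        for seq, score in candidates[:beam_width]:
            (finished if seq[-1] == end else beams).append((seq, score))
        if not beams:
            break
    finished.extend(beams)  # fall back to unfinished hypotheses at max_len
    return max(finished, key=lambda c: c[1])


greedy_seq, _ = beam_search(toy_step, beam_width=1)
beam_seq, beam_score = beam_search(toy_step, beam_width=2)
print(greedy_seq)  # ['<s>', 'a', 'x', '</s>']
print(beam_seq)    # ['<s>', 'b', 'z', '</s>']
```

Greedy decoding (beam width 1) commits to `a` because it is locally most likely and ends with probability 0.3, while a beam of 2 keeps `b` alive and finds the sequence with probability 0.36.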

Rating

- 5: Transformative: This paper is likely to change our field. It should be considered for a best paper award.

- 4.5: Exciting: It changed my thinking on this topic. I would fight for it to be accepted.

- 4: Strong: I learned a lot from it. I would like to see it accepted.

- 3.5: Leaning positive: It can be accepted more or less in its current form. However, the work it describes is not particularly exciting and/or inspiring, so it will not be a big loss if people don’t see it in this conference.

- 3: Ambivalent: It has merits (e.g., it reports state-of-the-art results, the idea is nice), but there are key weaknesses (e.g., I didn’t learn much from it, evaluation is not convincing, it describes incremental work). I believe it can significantly benefit from another round of revision, but I won’t object to accepting it if my co-reviewers are willing to champion it.

- 2.5: Leaning negative: I am leaning towards rejection, but I can be persuaded if my co-reviewers think otherwise.

- 2: Mediocre: I would rather not see it in the conference.

- 1.5: Weak: I am pretty confident that it should be rejected.

- 1: Poor: I would fight to have it rejected.
