Failed to load https://file.hankcs.com/hanlp/mtl/ud_ontonotes_tok_pos_lem_fea_ner_srl_dep_sdp_con_xlm_base_20210114_005825.zip. See traceback below:

================================ERROR LOG BEGINS================================

Traceback (most recent call last):

File "F:\jxnlp\jxnlp-sdk\hanlp\utils\component_util.py", line 81, in load_from_meta_file

obj.load(save_dir, verbose=verbose, **kwargs)

File "F:\jxnlp\jxnlp-sdk\hanlp\common\torch_component.py", line 173, in load

self.load_config(save_dir, **kwargs)

File "F:\jxnlp\jxnlp-sdk\hanlp\common\torch_component.py", line 125, in load_config

self.config[k] = Configurable.from_config(v)

File "D:\ProgramData\Anaconda3\envs\ownerhanlp\lib\site-packages\hanlp_common\configurable.py", line 30, in from_config

return cls(**deserialized_config)

File "F:\jxnlp\jxnlp-sdk\hanlp\layers\embeddings\contextual_word_embedding.py", line 141, in __init__

self._transformer_tokenizer = TransformerEncoder.build_transformer_tokenizer(self.transformer,

File "F:\jxnlp\jxnlp-sdk\hanlp\layers\transformers\encoder.py", line 146, in build_transformer_tokenizer

return cls.from_pretrained(transformer, use_fast=use_fast, do_basic_tokenize=do_basic_tokenize,

File "D:\ProgramData\Anaconda3\envs\ownerhanlp\lib\site-packages\transformers\models\auto\tokenization_auto.py", line 435, in from_pretrained

return tokenizer_class_fast.from_pretrained(pretrained_model_name_or_path, *inputs, **kwargs)

File "D:\ProgramData\Anaconda3\envs\ownerhanlp\lib\site-packages\transformers\tokenization_utils_base.py", line 1719, in from_pretrained

return cls._from_pretrained(

File "D:\ProgramData\Anaconda3\envs\ownerhanlp\lib\site-packages\transformers\tokenization_utils_base.py", line 1791, in _from_pretrained

tokenizer = cls(*init_inputs, **init_kwargs)

File "D:\ProgramData\Anaconda3\envs\ownerhanlp\lib\site-packages\transformers\models\xlm_roberta\tokenization_xlm_roberta_fast.py", line 134, in __init__

super().__init__(

File "D:\ProgramData\Anaconda3\envs\ownerhanlp\lib\site-packages\transformers\tokenization_utils_fast.py", line 96, in __init__

fast_tokenizer = TokenizerFast.from_file(fast_tokenizer_file)

Exception: EOF while parsing a value at line 1 column 2735847

=================================ERROR LOG ENDS=================================

Please upgrade hanlp with:

pip install --upgrade hanlp

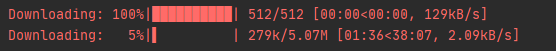

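I happened to find that someone else had run into the same problem, and it seems to be caused by the transformers cache. I deleted that cache folder, but when I run the code again, re-downloading the cached files is very slow: it often stalls at around 10%, after which the download seems to be skipped and the run fails with the error above. Is there any way to speed up this download?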
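
The `EOF while parsing a value` message from `TokenizerFast.from_file` suggests the tokenizer JSON file on disk ends before the JSON is complete, which would fit an interrupted download. Below is a minimal sketch for spotting such files using only the Python standard library. The helper names `is_complete_json` and `find_truncated_json` are hypothetical (they are not part of hanlp or transformers), and the cache directory to scan is an assumption that depends on the installed transformers version and environment.

```python
import json
from pathlib import Path


def is_complete_json(path):
    """Return True if the file at `path` parses as JSON from start to end."""
    try:
        with open(path, "r", encoding="utf-8") as f:
            json.load(f)
        return True
    except (json.JSONDecodeError, UnicodeDecodeError):
        # A truncated download typically fails here, either with a parse
        # error or with a multi-byte character cut in half.
        return False


def find_truncated_json(cache_dir):
    """List files under `cache_dir` that start like JSON but fail to parse.

    Hypothetical helper: files are picked by their first byte because cached
    files may not carry a .json extension (an assumption about the cache layout).
    """
    broken = []
    for path in Path(cache_dir).rglob("*"):
        if not path.is_file():
            continue
        with open(path, "rb") as f:
            first = f.read(1)
        # Only inspect files that look like a JSON object or array.
        if first in (b"{", b"[") and not is_complete_json(path):
            broken.append(path)
    return broken
```

Calling `find_truncated_json` on the cache folder (for example the folder that was deleted earlier, wherever it lives on this machine) returns the files that would need to be removed and downloaded again. This only identifies incomplete files; it does not make the download itself faster.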