This paper presents a practical method for large-scale open-domain QA based on a pre-computed sparse index, which can be implemented with Lucene.

The authors formulate document-based QA as follows:

Let $q$ be the input question, and let $A = \{(a, c)\}$ be a set of candidate answers. Each candidate answer is a tuple $(a, c)$, where $a$ is the answer text and $c$ is context information about $a$. The objective is to find model parameters $\theta$ that rank the correct answer as high as possible, i.e.:

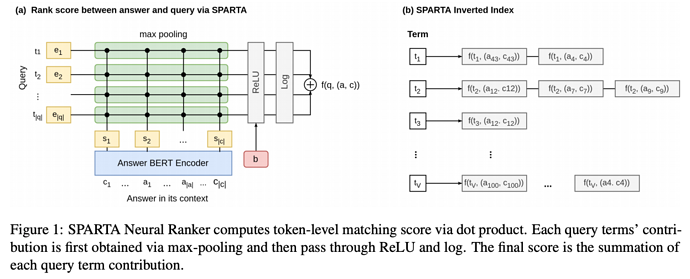

They then embed the query and the answer separately: the query with non-contextualized embeddings and the answer with contextualized embeddings.

The matching score is then computed from dot products between the query and answer token embeddings, followed by max-pooling and a ReLU.
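A minimal sketch of this scoring step, assuming each query token is looked up in a static embedding table, max-pooling runs over the answer's contextualized token embeddings, and the per-token ReLU scores are summed into one match score. The summation over query tokens, and the names `match_score` and `token_emb`, are illustrative assumptions rather than the paper's exact formulation.

```python
from typing import Dict, List

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def match_score(query_tokens: List[str],
                token_emb: Dict[str, Vector],
                answer_emb: List[Vector]) -> float:
    """Hypothetical query-answer score: dot product -> max-pool -> ReLU, summed over query tokens."""
    total = 0.0
    for tok in query_tokens:
        q = token_emb[tok]  # non-contextualized: depends only on the token itself
        best = max(dot(q, h) for h in answer_emb)  # max-pool over answer tokens
        total += max(0.0, best)  # ReLU, then (assumed) sum over query tokens
    return total


# Toy 2-d embeddings, made up for illustration.
token_emb = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
answer_emb = [[0.5, -1.0], [2.0, 0.5]]  # stand-in for contextualized answer token vectors
print(match_score(["a", "b"], token_emb, answer_emb))  # 2.0 + 0.5 = 2.5
```

Because each query-token term depends only on that token and the answer, never on the other query tokens, the score decomposes into independent per-token contributions.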

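Because the query embeddings are non-contextualized (each query token is embedded independently of the others), the relevance of every vocabulary token to each candidate answer can be pre-computed offline, and the resulting sparse term weights can be stored in a Lucene index.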
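The pre-computation can be sketched as building an inverted index: for every (vocabulary token, answer) pair, store the ReLU'd max-pooled score, keeping only non-zero entries so that the postings stay sparse. At query time, scoring then reduces to summing the postings of the query's tokens. This is a minimal sketch under the same assumptions as the scoring description above (summed per-token scores). A plain Python dict stands in for Lucene here, and `build_index` and `search` are hypothetical helpers, not Lucene's API.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(x * y for x, y in zip(u, v))


def build_index(vocab_emb: Dict[str, Vector],
                answers: Dict[str, List[Vector]]) -> Dict[str, Dict[str, float]]:
    """Offline step: term -> {answer_id: weight}, like a term-weighted inverted index."""
    index: Dict[str, Dict[str, float]] = defaultdict(dict)
    for doc_id, answer_emb in answers.items():
        for term, q in vocab_emb.items():
            weight = max(0.0, max(dot(q, h) for h in answer_emb))  # max-pool + ReLU
            if weight > 0.0:  # ReLU zeros are dropped, which keeps the index sparse
                index[term][doc_id] = weight
    return dict(index)


def search(index: Dict[str, Dict[str, float]],
           query_tokens: List[str], top_k: int = 10) -> List[Tuple[str, float]]:
    """Online step: no neural computation, only posting-list lookups and additions."""
    scores: Dict[str, float] = defaultdict(float)
    for tok in query_tokens:
        for doc_id, w in index.get(tok, {}).items():
            scores[doc_id] += w
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:top_k]


vocab_emb = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [-1.0, 0.0]}
answers = {"d1": [[0.5, -1.0], [2.0, 0.5]], "d2": [[0.0, 3.0]]}
index = build_index(vocab_emb, answers)
print(index)                      # {'a': {'d1': 2.0}, 'b': {'d1': 0.5, 'd2': 3.0}}
print(search(index, ["a", "b"]))  # [('d2', 3.0), ('d1', 2.5)]
```

Iterating over the full vocabulary for every answer is expensive, but it happens once, offline; the online cost is the same as an ordinary term-weighted lookup, which is why a standard engine such as Lucene can serve it.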

Comments

- It’s hard to believe that such a simple method performs so well. But if the results hold up, this is a good paper.

- 5: Transformative: This paper is likely to change our field. It should be considered for a best paper award.

- 4.5: Exciting: It changed my thinking on this topic. I would fight for it to be accepted.

- 4: Strong: I learned a lot from it. I would like to see it accepted.

- 3.5: Leaning positive: It can be accepted more or less in its current form. However, the work it describes is not particularly exciting and/or inspiring, so it will not be a big loss if people don’t see it in this conference.

- 3: Ambivalent: It has merits (e.g., it reports state-of-the-art results, the idea is nice), but there are key weaknesses (e.g., I didn’t learn much from it, evaluation is not convincing, it describes incremental work). I believe it can significantly benefit from another round of revision, but I won’t object to accepting it if my co-reviewers are willing to champion it.

- 2.5: Leaning negative: I am leaning towards rejection, but I can be persuaded if my co-reviewers think otherwise.

- 2: Mediocre: I would rather not see it in the conference.

- 1.5: Weak: I am pretty confident that it should be rejected.

- 1: Poor: I would fight to have it rejected.
